Invitation to the 2024 Asia Privacy Bridge Forum

Recent advancements in AI technology have accentuated the growing importance of data governance and privacy, while also highlighting the need for international cooperation.

The 13th Asia Privacy Bridge Forum, held in conjunction with Privacy Global Edge, will convene under the theme "International Collaborations in Trustworthy AI Governance and Privacy." This forum offers a unique opportunity for learning and growth in your respective fields. It will host in-depth discussions on global collaborative strategies for building a happier society in the AI era. The myriad ethical issues surrounding data protection and privacy, particularly where they intersect with artificial intelligence technologies, call for proactive cooperation among nations to strike a balance between technological progress and regulation, thereby fostering corporate innovation.

Consequently, the 13th Asia Privacy Bridge Forum will go beyond the mere exchange of knowledge pertaining to personal information protection. It will serve as a platform for a thorough analysis of the changes and impacts that artificial intelligence technology will have on various aspects of our lives, including work, education, entertainment, and politics. Furthermore, it will provide an opportunity to collectively generate innovative ideas and collaborative measures across these domains.

We are confident that your active participation will make a substantial contribution to the 13th Asia Privacy Bridge Forum as a venue for discussions that will shape a better future.

Beomsoo KIM

Director, Barun ICT Research Center

Keynote Speeches

Navigating the Future: AI Governance and Data Privacy in the Philippines - A Regulatory Perspective

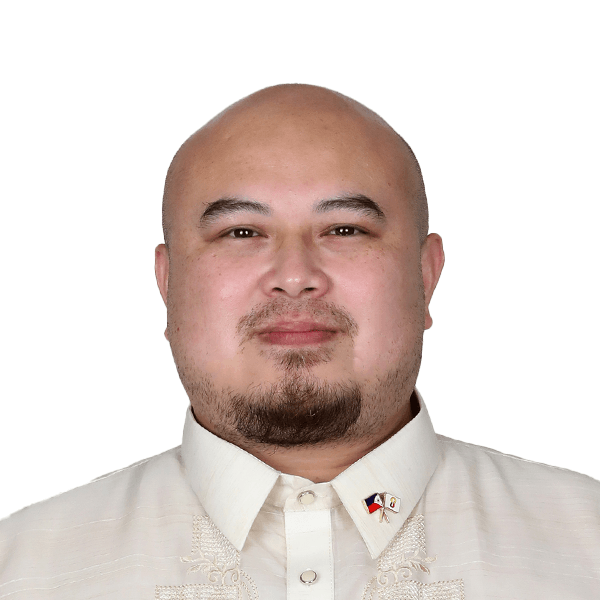

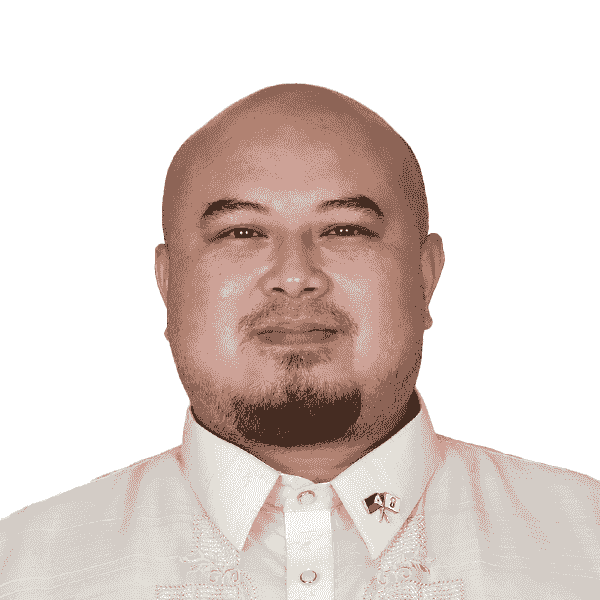

Ivin Ronald D.M. Alzona

Executive Director, National Privacy Commission, Republic of the Philippines

Summary of Discussion

DAY 1

Session 1: Navigating Gen AI and Trustworthy AI Governance for the Future

Moderator : Beakcheol Jang (Yonsei Univ., Korea)

Singapore's Evolving Approach to AI Governance

Jason Grant ALLEN

Associate Professor, Singapore Management University, Yong Pung How School of Law, Singapore

This presentation explores Singapore's evolving AI governance framework, highlighting the country's strategic approach to balancing innovation with public trust and safety. As one of the most AI-ready jurisdictions globally, Singapore has positioned AI as a key driver of its economic development while adopting a collaborative and risk-based governance model.

The discussion covers key initiatives such as the Model Framework for AI Governance, the AI Verify Toolkit, and the National AI Strategy (NAIS 1.0 and 2.0), focusing on the alignment between government, industry, and research in building a robust AI ecosystem.

The presentation also delves into Singapore’s "soft-touch" regulatory approach, which emphasizes voluntary standards and quasi-regulation, and compares it with more rules-based models such as those of the EU and China. Special attention is given to sector-specific AI governance in finance, through the FEAT Principles and the Veritas Toolkit, and to technology-specific governance for generative AI, addressing issues such as content provenance, safety, and AI for the public good.

Additionally, the presentation discusses Singapore's role in shaping regional AI governance through ASEAN and its global influence in international AI forums. The future outlook considers a potential shift toward more formal regulation as emerging technologies evolve, as well as the need for sustained public trust and collaboration in AI governance.

Data Protection, Competition, and AI Governance: The Importance of Data Portability and ADM Governance in Data Protection Laws

Qing HE

Assistant Professor, Beijing University of Posts and Telecommunications, China

This presentation addresses the complexities of data use policies and their effects on competition, focusing in particular on how these policies may hinder or promote competitive dynamics. Although certain data transfer policies, such as those enabling data portability rights, have the potential to enhance competition, their practical implementation often falls short of fostering competitive markets.

The presentation also delves into the governance of Automated Decision-Making (ADM) and its relationship with broader AI governance frameworks. Under the data protection laws of both China and the EU, individuals have the right to challenge algorithmic decisions that significantly affect them, which highlights the role of ADM governance in AI regulation. Key aspects explored include legal definitions, protection policies, and liability rules. A comparative analysis of ADM governance across the EU, the United States, and China is provided, covering the scope and definition of automated decision-making, its effect on individual rights, and how ADM governance intersects with policies on Generative AI. Relevant instruments such as the EU’s AI Act, the U.S. Blueprint for an AI Bill of Rights, President Biden’s Executive Order on AI, and China’s Personal Information Protection Law and related algorithmic provisions are examined in this context.

Finally, the presentation emphasizes the importance of risk classification in AI systems, with a particular focus on legal practice in China. Three case studies illustrate the significance of this issue: credit scoring systems in financial services, price discrimination in online services, and electronic surveillance and management systems in the workplace. These examples demonstrate the critical need for a structured approach to identifying and mitigating risks in AI systems across sectors.

Designing an Accountable Community in the Emerging AI Period

Kohei Kurihara

CEO, Privacy by Design Lab, Japan

This presentation delivers key insights to the design community and emphasizes accountability in the development of AI services and products. As AI spreads through society, AI developers and providers are increasingly expected to take on responsibility, especially as regulatory and societal demands on the supply side rise in the coming decades.

To address this challenging theme, the discussion highlights the crucial role the design community plays in enhancing safety and accountability in relationships among diverse stakeholders. By sharing knowledge and experience and integrating different perspectives and insights early in the process, the community can help prevent unintended consequences.

The community comprises various experts and practitioners who deepen their mutual literacy and, at times, amplify their work by "connecting the dots" through project collaborations. These projects strengthen trusted networks among parties that share a similar vision, contributing to the community’s goals.

The main topics of this presentation are: the actions needed in the emerging AI period to prevent unintended consequences; a multi-stakeholder accountability model built on sharing diverse experiences and methods; a learning-and-sharing community function that leverages synergies among community members in projects; designing a vision and roadmaps with people from diverse backgrounds, across cultures and histories; and identifying the benefits of community in countering AI harms. In conclusion, the presenter will describe how authentic, community-based relationship building, drawn from his past methodology, can shape the future, and will present actionable plans for designing a community network. He will also discuss future community design aimed at expanding design opportunities through multilateral Asian approaches.

Session 2: Reconciling Data Protection and Competition Laws in the Age of AI

Moderator : Hayoung Kim (Yonsei Univ., Korea)

Taking Stock: Data Protection, Privacy, and Competition Law

Orla Lynskey

Professor, University College London, Faculty of Laws, UK

Data protection and competition law have historically been treated as distinct fields of law with clearly demarcated boundaries, and there has been significant resistance to breaking down these boundaries. Nevertheless, legal and technical developments (such as Apple's use of privacy as a defense against allegations of abuse of market power) mean that their intersection is now inevitable. This presentation maps out and critically analyzes four ways in which these areas of law influence one another. First, data protection law is not neutral: its application (or non-application) affects market dynamics in ways that are relevant to competition law. Second, data protection is integrated into competition law analysis as part of the consumer welfare benchmark. Third, competition considerations influence the interpretation of some data protection concepts, such as consent, and the extent of data protection interferences. Finally, the legislature recognizes this intersection by imposing limitations on the data processing activities of digital gatekeepers, subject to data protection law.

Reproduction of Personas with AI and the Right of Publicity

Kunifumi Saito

Associate Professor, Faculty of Policy Management, Keio University, Japan

This presentation examines the relationship between personality rights and the right of publicity in the context of reproducing personas with artificial intelligence.

In the United States, most lawyers consider the right of publicity to be a type of intellectual property right, like copyright. Recently, however, an argument has emerged that emphasizes its similarities with the right to privacy. This argument classifies the functions of the right into four categories: the Right of Performance, the Right of Commercial Value, the Right of Control, and the Right of Dignity. Significantly, it points out the similarity between the Right of Commercial Value, the core function, and trademark rights.

Meanwhile, in 2012, the Supreme Court of Japan positioned the right of publicity as a kind of personality right. However, the official commentary on the decision emphasizes the similarities between the right of publicity and copyright. In practice, disputes over the right of publicity are assigned to the courts' specialized intellectual property divisions. In addition, lower-court case law distinguishes between the right of publicity and the personality rights that relate to moral damages, such as the right to privacy and the right of likeness.

Under Japanese law, personality rights cannot be inherited. For this reason, a celebrity's right of publicity is generally believed to cease upon his or her death. In this presentation, we will examine the legal rights involved in reproducing the persona of a deceased person using artificial intelligence. In doing so, we will draw on a U.S. theory that focuses on the similarities between the right of publicity and trademark law.

Personal Data & Generative AI

DaeHee Lee

Professor, Korea University, Law School, Republic of Korea

The presentation addresses Korea's personal data regime and related issues concerning AI development. Specifically, it focuses on the recently released "Guidelines on Processing of Personal Information Publicly Available for the Development and Deployment of AI Models" by Korea's Personal Information Protection Commission. The presentation argues that personal data concerns should not serve as obstacles to AI development.

Session 3: Digital Shield: Safeguarding Privacy and Data for Vulnerable Users

Moderator : Hyojin Jo (Yonsei Univ., Korea)

Challenges for Non-digital Natives to Protect the Rights of Digital Natives

Byungsoo Jung

Director, Children's Rights Division, The Korean Committee for UNICEF, Republic of Korea

In 1989, Tim Berners-Lee proposed a hypertext-based information system that became the World Wide Web (WWW). That same year, the UN General Assembly (UNGA) unanimously adopted the UN Convention on the Rights of the Child (CRC). The WWW and the CRC may not seem directly connected, but both have had a profound impact on life, especially for children.

Children have traditionally been marginalized and viewed as a labor force, parental property, and so on. However, the CRC affirmed that children are subjects of rights. Digital technology has also significantly expanded children's rights. Educational materials available online support children's 'self-directed learning,' 'distance learning' helps ensure equal educational opportunities for vulnerable children, and digital tools facilitate social participation. Because they grow up with these technologies, children are referred to as digital natives.

In response to the growing influence of digital technology, the UN Committee on the Rights of the Child (the Committee) issued "General Comment No. 25 on Children's Rights in Relation to the Digital Environment" in 2021. Children from around the world expressed concerns that while digital technology is an indispensable tool in their lives, it exposes them to the risks of violence, abuse, misinformation and disinformation, and the collection of personal information, which can lead to further harm. The Committee urges all States Parties to protect children from harmful content and from all forms of violence in the digital environment, to respect and protect children's privacy, and to regulate advertising and marketing in digital services that are inappropriate for children.

UNICEF, the only agency explicitly mandated by the CRC, is also committed to protecting children's rights in the digital environment. It has established a strategic framework for online child protection and seeks collaboration from various stakeholders, including governments, businesses, caregivers, educators, and children. It is also moving quickly to provide guidance on emerging technologies such as AI.

UNICEF calls on all stakeholders to make choices and take actions that put children at the center. This is similar to how traffic lights and traffic laws were created to bring order to roads that had become chaotic and dangerous as the number of cars grew. The difference is that 'child-centered' approaches are built in from the start to reduce trial and error.

Children and AI: Key Issues to Consider to Empower and Protect Them

Steven Edwin Vosloo

Policy Specialist, Digital Engagement and Protection, UNICEF Innocenti, Italy

This presentation provides a detailed framework for pseudonymizing unstructured data, which is critical for privacy and AI applications. Starting with an introduction to the importance of pseudonymization in today’s data-driven landscape, it outlines key methodologies for handling sensitive information in formats such as images, videos, and free text.

Practical applications in fields such as healthcare, security, and AI development are presented, illustrating real-world benefits and challenges. The presentation concludes with a step-by-step approach to pseudonymization, spanning preparation, risk assessment, processing, and management, designed to foster responsible and compliant data use in an evolving regulatory environment.

Safeguarding and Empowering Vulnerable Children in the Digital Age: Save the Children's Global Initiatives

Jeffrey DeMarco

Senior Advisor, Protecting Children from Digital Harm, Save the Children's Global Safe Digital Childhood Initiative, UK

This presentation explores Save the Children’s comprehensive efforts to protect and empower vulnerable children online through three key initiatives.

First, the Safe Digital Childhood Coalition addresses online protection challenges in the Global South, where inadequate regulations expose children to online risks. Notable examples include the development of Sri Lanka's National Action Plan, aligned with WeProtect Global Alliance recommendations, and the SaferKidsPH program in the Philippines, which combats online sexual exploitation and abuse.

Second, the organization promotes digital literacy and inclusive online safety education through initiatives such as the IT for Learning/DIGITAL project in India and Indonesia, and a cyber safety campaign led by Save the Children Australia across Pacific nations in collaboration with Facebook.

Finally, Save the Children is leveraging technology to tackle online harms with innovative approaches, including an AI-powered project in India aimed at preventing online violence, a collaboration with NetClean to detect abuse materials on corporate devices, and the Cloud Chaos mobile game developed in Cambodia. Together, these programs reflect a global strategy to safeguard children and empower them as responsible digital citizens.

DAY 2

Session 4: Platform Governance and AI Accountability

Moderator : Jongsoo Yoon (Attorney, Lee & Ko, Republic of Korea)

Meta's Approach to Responsible AI

Raina Yeung

Director of Privacy and Data Policy, Engagement, APAC at Meta, Singapore

With the rapid evolution of AI technology, including Generative AI, it is essential for different stakeholders to ensure that its development and deployment are responsible and transparent. This presentation shares Meta's experience in AI development, including the recent release of Llama 3.1 and how Meta built it responsibly. Using these products as examples, we aim to highlight the benefits of an open-source approach for safety, security, competition, and innovation in AI development, and to explain how our approach to responsible AI has continued to guide us in addressing hard questions around issues such as privacy and security, fairness and inclusion, robustness and safety, transparency and control, and accountability and governance.

Responsible AI in Malaysia: The Role of Data Protection Policy

Jillian Chia

Attorney, SKRINE, Malaysia

This presentation focuses on the AI landscape in Malaysia, particularly the regulatory environment and proposed plans to regulate AI, as well as the challenges Malaysia faces in this area. The discussion also covers laws that affect the implementation of AI in Malaysia, such as the country’s personal data protection and cybersecurity regimes.

Regulatory Landscape for Generative AI in Japan: Insights and Outlook

Hitomi Iwase

Attorney, Nishimura & Asahi, Japan

Japan's approach to regulating Generative AI is characterized by a soft-law framework, while existing laws, such as the Act on the Protection of Personal Information (APPI) and the Copyright Act, apply to the development or use of Generative AI depending on the industry or the nature of the AI. In April 2024, the Ministry of Internal Affairs and Communications and the Ministry of Economy, Trade and Industry issued the "AI Guidelines for Businesses." These guidelines set out 10 guiding principles, including fairness, transparency, and accountability, as well as practical guidance for AI developers, providers, and users.

This presentation also covers the legal issues surrounding Generative AI, such as potential violations of the APPI and copyright infringement, and examines what platforms need to do to manage the risks of developing and providing Generative AI.

Session 5: What is Data Sovereignty? Global Cross-border Privacy Rules (GCBPRs) and Cooperation in Investigation and Enforcement

Moderator : Kwangbae PARK (Attorney, Lee & Ko, Republic of Korea)

South Korea's Regulatory Framework for Cross-Border Data Transfer Policies

Jeongsoo LEE

Deputy Director, Personal Information Protection Commission, Republic of Korea

This presentation offers a comprehensive introduction to Korean legislation on cross-border data transfers. It begins with a brief historical overview of the legislative framework, followed by a clarification of its scope and application.

The presentation then details the amended legislation that took effect in September 2023, which strengthens the mechanisms for safe cross-border transfers. These include provisions for certification and equivalency recognition, which form part of Korea's adequacy system.

Finally, the presentation explores potential future developments in cross-border transfer regulation, given the growing global demand for such transfers.

Data Sovereignty in Vietnam: Legal Requirements, Enforcement Trends, and Global CBPRs Interactions

Huyen-Minh Nguyen

Senior Associate, BMVN International LLC, Vietnam

Imposing data localization requirements is one way Vietnam asserts its sovereignty over data. Vietnam's first data localization requirement was introduced under the Cybersecurity Law of 2018, which broadly applies to all offshore and onshore enterprises that provide services on the Internet and process certain data generated by and pertaining to service users in Vietnam. However, due to a lack of guidance from local authorities and the absence of a legal mechanism to enforce it, the requirement remained unenforceable for years. In 2022, the Government issued Decree 53 to clarify the data localization requirements under the Cybersecurity Law of 2018. Decree 53 significantly narrows the cases in which companies must localize their data in Vietnam, with different triggering conditions for offshore and onshore enterprises.

This presentation discusses the requirements of the Cybersecurity Law of 2018, Decree 53, and enforcement trends over the last few years. It also explores several new regulations that attempt to introduce additional cross-border data restrictions, such as the Data Law and the draft decree guiding the Law on Telecommunications, and how these may interact with or hinder the application of the Global Cross-Border Privacy Rules in Vietnam.

Global Cross-Border Transfers: A Comparative Analysis of China, Hong Kong, and Beyond

Dominic Edmondson

Special Counsel, Baker McKenzie, Hong Kong

This presentation focuses on the challenges of enabling cross-border data flows while complying with data sovereignty laws. First, it covers how conflicting laws across countries can complicate data transfers and analyzes data localization requirements in various countries, such as China. Next, it assesses the effectiveness of the Global Cross-Border Privacy Rules (GCBPRs) in facilitating cross-border data transfers and explores the challenges of achieving widespread adoption, using the APEC Cross-Border Privacy Rules (CBPR) system as an example. The presentation examines that system's role in enabling secure data flows while protecting privacy and provides examples of how participating countries have used it.

Session 6: Fair Use of Data

Moderator : Byungnam Lee (Senior Advisor, Kim & Chang, Republic of Korea)

Exploring Utility and Privacy in Synthetic Data

Joseph Hyuntae Kim

Professor, Yonsei University, Department of Applied Statistics, Republic of Korea

Synthetic data is becoming increasingly popular as a resource for data-driven decision-making and machine learning, particularly in contexts where privacy and data security are paramount. However, creating synthetic data requires a careful balance between utility (ensuring the data remains useful) and privacy (safeguarding sensitive information from exposure). This presentation examines these two key aspects of synthetic data and illustrates them with an auto insurance example. It also shares practical perspectives drawn from the presenter's industry experience as CEO of a synthetic data startup.

Contact Us

Barun ICT Research Center

50 Yonsei-ro, Seodaemun-gu, Seoul 03722, Korea

Tel : +82-2-2123-6694

Email : barunict@barunict.kr